Timeseries classification from scratch

Source: vignettes/examples/timeseries/timeseries_classification_from_scratch.Rmd

Introduction

This example shows how to do timeseries classification from scratch, starting from raw TSV timeseries files on disk. We demonstrate the workflow on the FordA dataset from the UCR/UEA archive.

Setup

library(keras3)

use_backend("jax")

Load the data: the FordA dataset

Dataset description

The dataset we are using here is called FordA. The data comes from the UCR archive. The dataset contains 3601 training instances and another 1320 testing instances. Each timeseries corresponds to a measurement of engine noise captured by a motor sensor. For this task, the goal is to automatically detect the presence of a specific issue with the engine. The problem is a balanced binary classification task. The full description of this dataset can be found here.

Read the TSV data

We will use the FordA_TRAIN file for training and the FordA_TEST file for testing. The simplicity of this dataset allows us to demonstrate effectively how to use ConvNets for timeseries classification. In both files, the first column holds the label.
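As a minimal sketch of that layout, the snippet below parses a tiny made-up TSV string (two rows with three timepoints each, instead of 500) using base R and splits off the label column. The toy values and the names `toy_tsv`, `toy`, `labels`, and `series` are illustrative assumptions, not part of the dataset.

```r
# Toy stand-in for a FordA-style file: label first, then the timeseries values.
# (Hypothetical data; the real files have 500 values per row.)
toy_tsv <- "-1\t0.5\t-0.2\t0.1\n1\t-1.3\t0.7\t0.9\n"

toy <- read.delim(text = toy_tsv, header = FALSE)

labels <- toy[[1]]                       # first column: class label
series <- unname(as.matrix(toy[, -1]))   # remaining columns: one row per timeseries

labels
dim(series)
```

The same split (first column versus the rest) is what the loader defined next performs on the real files.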

get_data <- function(path) {
  if (path |> startsWith("https://"))
    path <- get_file(origin = path)  # cache file locally

  data <- readr::read_tsv(
    path, col_names = FALSE,
    # Each row is: one integer (the label),
    # followed by 500 doubles (the timeseries)
    col_types = paste0("i", strrep("d", 500))
  )

  y <- as.matrix(data[[1]])
  x <- as.matrix(data[,-1])
  dimnames(x) <- dimnames(y) <- NULL

  list(x, y)
}

root_url <- "https://raw.githubusercontent.com/hfawaz/cd-diagram/master/FordA/"

c(x_train, y_train) %<-% get_data(paste0(root_url, "FordA_TRAIN.tsv"))
c(x_test, y_test) %<-% get_data(paste0(root_url, "FordA_TEST.tsv"))


str(keras3:::named_list(
  x_train, y_train,
  x_test, y_test
))

## List of 4
##  $ x_train: num [1:3601, 1:500] -0.797 0.805 0.728 -0.234 -0.171 ...
##  $ y_train: int [1:3601, 1] -1 1 -1 -1 -1 1 1 1 1 1 ...
##  $ x_test : num [1:1320, 1:500] -0.14 0.334 0.717 1.24 -1.159 ...
##  $ y_test : int [1:1320, 1] -1 -1 -1 1 -1 1 -1 -1 1 1 ...

Visualize the data

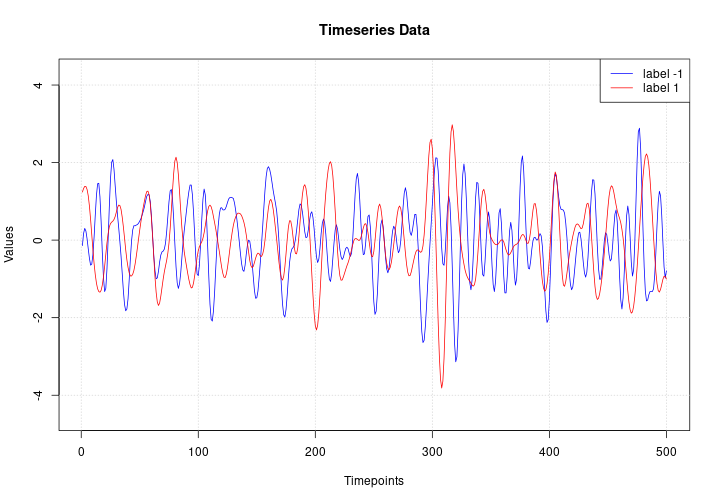

Here we visualize one timeseries example for each class in the dataset.

plot(NULL, main = "Timeseries Data",
     xlab = "Timepoints", ylab = "Values",
     xlim = c(1, ncol(x_test)),
     ylim = range(x_test))
grid()
lines(x_test[match(-1, y_test), ], col = "blue")
lines(x_test[match( 1, y_test), ], col = "red")
legend("topright", legend = c("label -1", "label 1"), col = c("blue", "red"), lty = 1)
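The calls to `match()` above pick the first row that belongs to each class. As a small illustration on made-up labels (the vector `y_toy` is hypothetical), `match(value, table)` returns the position of the first matching element:

```r
# Hypothetical label vector using the same -1 / 1 coding as FordA.
y_toy <- c(1L, -1L, -1L, 1L)

first_neg <- match(-1, y_toy)  # position of the first -1
first_pos <- match(1, y_toy)   # position of the first 1

c(first_neg, first_pos)
```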

Standardize the data

Our timeseries already share a single length (500). In general, however, the values of different timeseries can span very different ranges, which is not ideal for a neural network; we should seek to normalize the input values. For this specific dataset, the data is already z-normalized: each timeseries sample has a mean equal to zero and a standard deviation equal to one. This type of normalization is very common for timeseries classification problems; see Bagnall et al. (2016).
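FordA needs no extra work here, but for data that is not yet normalized, per-series z-normalization could look like the following sketch. The matrix `raw` and the helper `z_normalize()` are hypothetical names introduced for illustration. Note that `sd()` uses the n - 1 denominator, which may differ slightly from the convention used to prepare the archive.

```r
set.seed(1)

# Hypothetical raw data: 4 series of length 10 on an arbitrary scale.
raw <- matrix(rnorm(40, mean = 50, sd = 10), nrow = 4)

z_normalize <- function(x) {
  # Standardize each row (one timeseries) independently.
  # The length-nrow vectors recycle down the columns, so row i is
  # shifted by its own mean and divided by its own standard deviation.
  (x - rowMeans(x)) / apply(x, 1, sd)
}

z <- z_normalize(raw)

round(rowMeans(z), 10)  # per-series means, approximately 0
apply(z, 1, sd)         # per-series standard deviations, approximately 1
```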

Note that the timeseries data used here are univariate, meaning we only have one channel per timeseries example. We will therefore reshape each timeseries into a multivariate one with a single channel by adding a trailing axis of length one. This will allow us to construct a model that is easily applicable to multivariate timeseries.
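The reshape itself is not shown in this section. A minimal sketch, assuming `x_train` and `x_test` are the 2D matrices loaded earlier, is to append a trailing channel axis by assigning to `dim()`. A toy matrix (`x_toy`, hypothetical) is used so the block runs on its own:

```r
# Toy stand-in for x_train: 3 series of length 5 (the real data is 3601 x 500).
x_toy <- matrix(seq_len(15), nrow = 3)

# Append a trailing channel axis of length 1:
# (samples, timesteps) -> (samples, timesteps, 1)
dim(x_toy) <- c(dim(x_toy), 1)
dim(x_toy)

# Applied to the tutorial's arrays, this would read (assumed, not run here):
# dim(x_train) <- c(dim(x_train), 1)
# dim(x_test)  <- c(dim(x_test), 1)
```

After such a step, `dim(x_train)[-1]` would be `c(500, 1)`, which matches the `(None, 500, 1)` input shape reported in the model summary.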

Finally, in order to use sparse_categorical_crossentropy, we will have to count the number of classes beforehand.
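The counting step is not shown in this section either, although `make_model()` relies on a `num_classes` variable. One way to obtain it, sketched here on hypothetical toy labels, is to count the distinct label values:

```r
# Toy labels in the original FordA coding (-1 / 1), stored as a one-column
# matrix like y_train.
y_toy <- matrix(c(-1L, 1L, -1L, -1L, 1L), ncol = 1)

num_classes_toy <- length(unique(as.vector(y_toy)))
num_classes_toy

# For the tutorial's data the analogous line would be (assumed, not run here):
# num_classes <- length(unique(y_train))
```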

Now we shuffle the training set because we will be using the validation_split option later when training.
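To see why the order matters: `validation_split` holds out the trailing fraction of the rows it is given, so an unshuffled file could yield an unrepresentative validation set. The sketch below mimics that slicing in plain R for the 3601 training rows. The rounding rule (floor of n times one minus the split) is our reading of Keras's behavior and should be treated as an assumption; the variable names are hypothetical.

```r
n <- 3601                # training rows in FordA_TRAIN
validation_split <- 0.2
batch_size <- 32

n_fit <- floor(n * (1 - validation_split))  # leading rows used for fitting
n_val <- n - n_fit                          # trailing rows held out for validation
steps_per_epoch <- ceiling(n_fit / batch_size)

c(n_fit = n_fit, n_val = n_val, steps_per_epoch = steps_per_epoch)
```

Under that assumption the step count agrees with the `90/90` shown in the training log.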

c(x_train, y_train) %<-% listarrays::shuffle_rows(x_train, y_train)
# idx <- sample.int(nrow(x_train))
# x_train %<>% .[idx,, ,drop = FALSE]
# y_train %<>% .[idx, ,drop = FALSE]

Standardize the labels to non-negative integers. The expected labels will then be 0 and 1.

y_train[y_train == -1L] <- 0L
y_test[y_test == -1L] <- 0L

Build a model

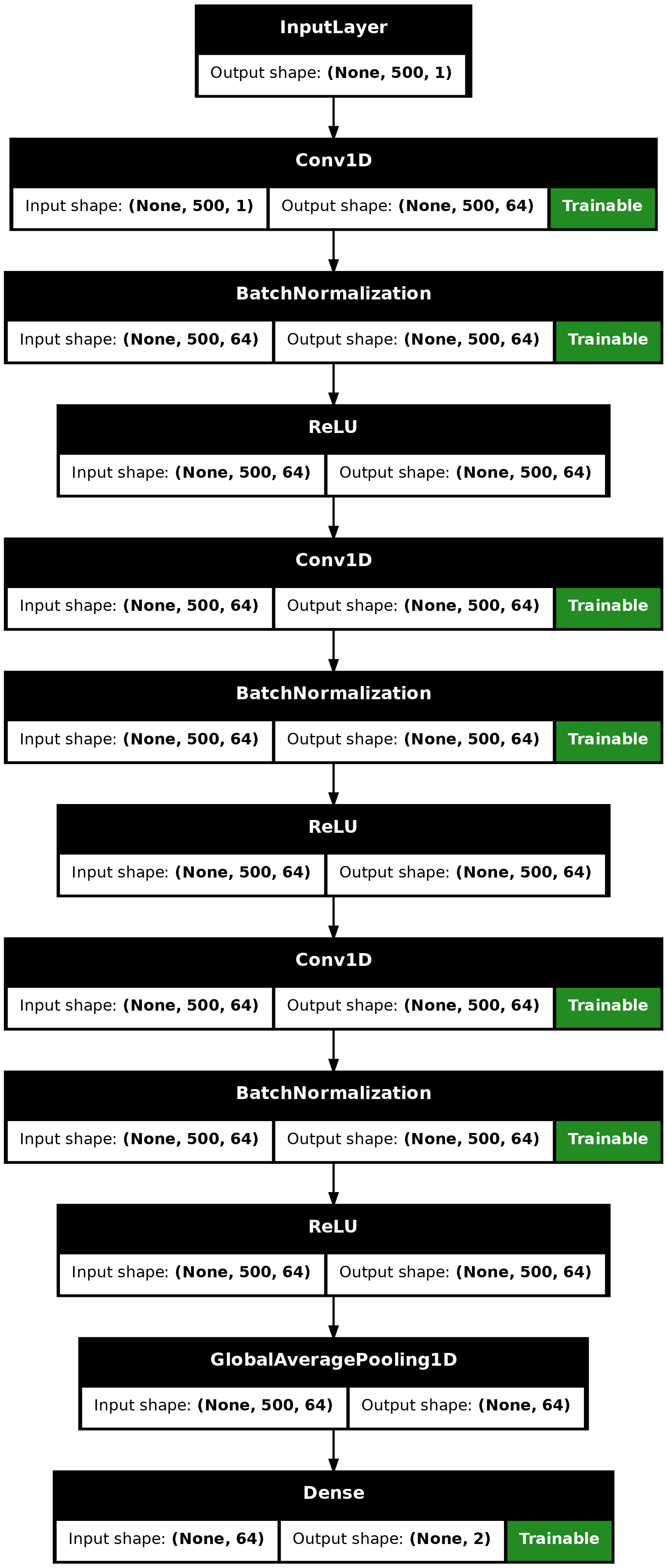

We build a Fully Convolutional Neural Network originally proposed in this paper. The implementation is based on the TF 2 version provided here. The following hyperparameters (kernel_size, filters, the usage of BatchNorm) were found via random search using KerasTuner.

make_model <- function(input_shape) {
  inputs <- keras_input(input_shape)

  outputs <- inputs |>
    # conv1
    layer_conv_1d(filters = 64, kernel_size = 3, padding = "same") |>
    layer_batch_normalization() |>
    layer_activation_relu() |>
    # conv2
    layer_conv_1d(filters = 64, kernel_size = 3, padding = "same") |>
    layer_batch_normalization() |>
    layer_activation_relu() |>
    # conv3
    layer_conv_1d(filters = 64, kernel_size = 3, padding = "same") |>
    layer_batch_normalization() |>
    layer_activation_relu() |>
    # pooling
    layer_global_average_pooling_1d() |>
    # final output
    layer_dense(num_classes, activation = "softmax")

  keras_model(inputs, outputs)
}

model <- make_model(input_shape = dim(x_train)[-1])
model

## Model: "functional"

## ┏━━━━━━━━━━━━━━━━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━━━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━┓
## ┃ Layer (type)                ┃ Output Shape          ┃    Param # ┃ Trai… ┃
## ┡━━━━━━━━━━━━━━━━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━━━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━┩
## │ input_layer (InputLayer)    │ (None, 500, 1)        │          0 │   -   │
## ├─────────────────────────────┼───────────────────────┼────────────┼───────┤
## │ conv1d (Conv1D)             │ (None, 500, 64)       │        256 │   Y   │
## ├─────────────────────────────┼───────────────────────┼────────────┼───────┤
## │ batch_normalization         │ (None, 500, 64)       │        256 │   Y   │
## │ (BatchNormalization)        │                       │            │       │
## ├─────────────────────────────┼───────────────────────┼────────────┼───────┤
## │ re_lu (ReLU)                │ (None, 500, 64)       │          0 │   -   │
## ├─────────────────────────────┼───────────────────────┼────────────┼───────┤
## │ conv1d_1 (Conv1D)           │ (None, 500, 64)       │     12,352 │   Y   │
## ├─────────────────────────────┼───────────────────────┼────────────┼───────┤
## │ batch_normalization_1       │ (None, 500, 64)       │        256 │   Y   │
## │ (BatchNormalization)        │                       │            │       │
## ├─────────────────────────────┼───────────────────────┼────────────┼───────┤
## │ re_lu_1 (ReLU)              │ (None, 500, 64)       │          0 │   -   │
## ├─────────────────────────────┼───────────────────────┼────────────┼───────┤
## │ conv1d_2 (Conv1D)           │ (None, 500, 64)       │     12,352 │   Y   │
## ├─────────────────────────────┼───────────────────────┼────────────┼───────┤
## │ batch_normalization_2       │ (None, 500, 64)       │        256 │   Y   │
## │ (BatchNormalization)        │                       │            │       │
## ├─────────────────────────────┼───────────────────────┼────────────┼───────┤
## │ re_lu_2 (ReLU)              │ (None, 500, 64)       │          0 │   -   │
## ├─────────────────────────────┼───────────────────────┼────────────┼───────┤
## │ global_average_pooling1d    │ (None, 64)            │          0 │   -   │
## │ (GlobalAveragePooling1D)    │                       │            │       │
## ├─────────────────────────────┼───────────────────────┼────────────┼───────┤
## │ dense (Dense)               │ (None, 2)             │        130 │   Y   │
## └─────────────────────────────┴───────────────────────┴────────────┴───────┘
##  Total params: 25,858 (101.01 KB)
##  Trainable params: 25,474 (99.51 KB)
##  Non-trainable params: 384 (1.50 KB)
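The parameter counts in this summary can be checked by hand. The sketch below reproduces them with the usual formulas for convolution, batch normalization, and dense layers; the helper names (`conv1d_params`, `bn_params`, `dense_params`) are hypothetical and exist only for this arithmetic.

```r
# Conv1D: one kernel of size (kernel_size x in_channels) per filter, plus a bias per filter.
conv1d_params <- function(kernel_size, in_channels, filters) {
  kernel_size * in_channels * filters + filters
}

# BatchNormalization: gamma, beta, moving mean, moving variance per channel.
bn_params <- function(channels) 4 * channels

# Dense: weight matrix plus one bias per unit.
dense_params <- function(inputs, units) inputs * units + units

conv  <- c(conv1d_params(3, 1, 64),    # conv1d:   1 input channel
           conv1d_params(3, 64, 64),   # conv1d_1: 64 input channels
           conv1d_params(3, 64, 64))   # conv1d_2: 64 input channels
bn    <- rep(bn_params(64), 3)
dense <- dense_params(64, 2)

total         <- sum(conv) + sum(bn) + dense
non_trainable <- 3 * 2 * 64            # moving mean and variance of each BN layer
trainable     <- total - non_trainable

c(total = total, trainable = trainable, non_trainable = non_trainable)
```

The totals agree with the summary: 25,858 parameters overall, of which the 384 batch-normalization moving statistics are not trained.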

plot(model, show_shapes = TRUE)


Train the model

epochs <- 500
batch_size <- 32

callbacks <- c(
  callback_model_checkpoint(
    "best_model.keras", save_best_only = TRUE,
    monitor = "val_loss"
  ),
  callback_reduce_lr_on_plateau(
    monitor = "val_loss", factor = 0.5,
    patience = 20, min_lr = 0.0001
  ),
  callback_early_stopping(
    monitor = "val_loss", patience = 50,
    verbose = 1
  )
)

model |> compile(
  optimizer = "adam",
  loss = "sparse_categorical_crossentropy",
  metrics = "sparse_categorical_accuracy"
)

history <- model |> fit(
  x_train, y_train,
  batch_size = batch_size,
  epochs = epochs,
  callbacks = callbacks,
  validation_split = 0.2
)

## Epoch 1/500

## 90/90 - 4s - 41ms/step - loss: 0.5310 - sparse_categorical_accuracy: 0.7198 - val_loss: 0.7948 - val_sparse_categorical_accuracy: 0.4896 - learning_rate: 1.0000e-03

## Epoch 2/500

## 90/90 - 1s - 13ms/step - loss: 0.4771 - sparse_categorical_accuracy: 0.7597 - val_loss: 0.9159 - val_sparse_categorical_accuracy: 0.4896 - learning_rate: 1.0000e-03

## Epoch 3/500

## 90/90 - 0s - 3ms/step - loss: 0.4717 - sparse_categorical_accuracy: 0.7573 - val_loss: 0.9025 - val_sparse_categorical_accuracy: 0.4896 - learning_rate: 1.0000e-03

## Epoch 4/500

## 90/90 - 0s - 2ms/step - loss: 0.4113 - sparse_categorical_accuracy: 0.8000 - val_loss: 0.8173 - val_sparse_categorical_accuracy: 0.4910 - learning_rate: 1.0000e-03

## Epoch 5/500

## 90/90 - 0s - 3ms/step - loss: 0.4164 - sparse_categorical_accuracy: 0.7920 - val_loss: 0.6884 - val_sparse_categorical_accuracy: 0.5146 - learning_rate: 1.0000e-03

## Epoch 6/500

## 90/90 - 0s - 4ms/step - loss: 0.3984 - sparse_categorical_accuracy: 0.8101 - val_loss: 0.4227 - val_sparse_categorical_accuracy: 0.8294 - learning_rate: 1.0000e-03

## Epoch 7/500

## 90/90 - 0s - 2ms/step - loss: 0.3878 - sparse_categorical_accuracy: 0.8132 - val_loss: 0.4749 - val_sparse_categorical_accuracy: 0.7018 - learning_rate: 1.0000e-03

## Epoch 8/500

## 90/90 - 0s - 3ms/step - loss: 0.3739 - sparse_categorical_accuracy: 0.8163 - val_loss: 0.3880 - val_sparse_categorical_accuracy: 0.8252 - learning_rate: 1.0000e-03

## Epoch 9/500

## 90/90 - 0s - 2ms/step - loss: 0.3684 - sparse_categorical_accuracy: 0.8292 - val_loss: 0.4363 - val_sparse_categorical_accuracy: 0.7767 - learning_rate: 1.0000e-03

## Epoch 10/500

## 90/90 - 0s - 2ms/step - loss: 0.3590 - sparse_categorical_accuracy: 0.8306 - val_loss: 0.5077 - val_sparse_categorical_accuracy: 0.7282 - learning_rate: 1.0000e-03

## Epoch 11/500

## 90/90 - 0s - 3ms/step - loss: 0.3638 - sparse_categorical_accuracy: 0.8247 - val_loss: 0.3574 - val_sparse_categorical_accuracy: 0.8405 - learning_rate: 1.0000e-03

## Epoch 12/500

## 90/90 - 0s - 2ms/step - loss: 0.3459 - sparse_categorical_accuracy: 0.8396 - val_loss: 0.8054 - val_sparse_categorical_accuracy: 0.6352 - learning_rate: 1.0000e-03

## Epoch 13/500

## 90/90 - 0s - 2ms/step - loss: 0.3350 - sparse_categorical_accuracy: 0.8552 - val_loss: 0.3838 - val_sparse_categorical_accuracy: 0.8155 - learning_rate: 1.0000e-03

## Epoch 14/500

## 90/90 - 0s - 2ms/step - loss: 0.3303 - sparse_categorical_accuracy: 0.8545 - val_loss: 0.4148 - val_sparse_categorical_accuracy: 0.7920 - learning_rate: 1.0000e-03

## Epoch 15/500

## 90/90 - 0s - 2ms/step - loss: 0.3228 - sparse_categorical_accuracy: 0.8507 - val_loss: 1.6007 - val_sparse_categorical_accuracy: 0.5132 - learning_rate: 1.0000e-03

## Epoch 16/500

## 90/90 - 0s - 2ms/step - loss: 0.3232 - sparse_categorical_accuracy: 0.8587 - val_loss: 0.5349 - val_sparse_categorical_accuracy: 0.7184 - learning_rate: 1.0000e-03

## Epoch 17/500

## 90/90 - 0s - 2ms/step - loss: 0.3119 - sparse_categorical_accuracy: 0.8656 - val_loss: 0.3336 - val_sparse_categorical_accuracy: 0.8669 - learning_rate: 1.0000e-03

## Epoch 18/500

## 90/90 - 0s - 2ms/step - loss: 0.3057 - sparse_categorical_accuracy: 0.8646 - val_loss: 0.5241 - val_sparse_categorical_accuracy: 0.7351 - learning_rate: 1.0000e-03

## Epoch 19/500

## 90/90 - 0s - 2ms/step - loss: 0.3042 - sparse_categorical_accuracy: 0.8691 - val_loss: 0.4023 - val_sparse_categorical_accuracy: 0.8128 - learning_rate: 1.0000e-03

## Epoch 20/500

## 90/90 - 0s - 2ms/step - loss: 0.3037 - sparse_categorical_accuracy: 0.8677 - val_loss: 0.4818 - val_sparse_categorical_accuracy: 0.7670 - learning_rate: 1.0000e-03

## Epoch 21/500

## 90/90 - 0s - 2ms/step - loss: 0.2834 - sparse_categorical_accuracy: 0.8795 - val_loss: 0.3017 - val_sparse_categorical_accuracy: 0.8696 - learning_rate: 1.0000e-03

## Epoch 22/500

## 90/90 - 0s - 1ms/step - loss: 0.2879 - sparse_categorical_accuracy: 0.8819 - val_loss: 0.4130 - val_sparse_categorical_accuracy: 0.8003 - learning_rate: 1.0000e-03

## Epoch 23/500

## 90/90 - 0s - 1ms/step - loss: 0.2778 - sparse_categorical_accuracy: 0.8844 - val_loss: 0.3149 - val_sparse_categorical_accuracy: 0.8599 - learning_rate: 1.0000e-03

## Epoch 24/500

## 90/90 - 0s - 2ms/step - loss: 0.2743 - sparse_categorical_accuracy: 0.8861 - val_loss: 0.3331 - val_sparse_categorical_accuracy: 0.8460 - learning_rate: 1.0000e-03

## Epoch 25/500

## 90/90 - 0s - 1ms/step - loss: 0.2654 - sparse_categorical_accuracy: 0.8892 - val_loss: 0.4245 - val_sparse_categorical_accuracy: 0.7892 - learning_rate: 1.0000e-03

## Epoch 26/500

## 90/90 - 0s - 1ms/step - loss: 0.3058 - sparse_categorical_accuracy: 0.8660 - val_loss: 0.3187 - val_sparse_categorical_accuracy: 0.8669 - learning_rate: 1.0000e-03

## Epoch 27/500

## 90/90 - 0s - 1ms/step - loss: 0.2619 - sparse_categorical_accuracy: 0.8965 - val_loss: 0.4151 - val_sparse_categorical_accuracy: 0.7850 - learning_rate: 1.0000e-03

## Epoch 28/500

## 90/90 - 0s - 1ms/step - loss: 0.2785 - sparse_categorical_accuracy: 0.8792 - val_loss: 0.3807 - val_sparse_categorical_accuracy: 0.8308 - learning_rate: 1.0000e-03

## Epoch 29/500

## 90/90 - 0s - 2ms/step - loss: 0.2594 - sparse_categorical_accuracy: 0.8878 - val_loss: 0.4138 - val_sparse_categorical_accuracy: 0.8128 - learning_rate: 1.0000e-03

## Epoch 30/500

## 90/90 - 0s - 2ms/step - loss: 0.2644 - sparse_categorical_accuracy: 0.8878 - val_loss: 0.5111 - val_sparse_categorical_accuracy: 0.7323 - learning_rate: 1.0000e-03

## Epoch 31/500

## 90/90 - 0s - 2ms/step - loss: 0.2653 - sparse_categorical_accuracy: 0.8924 - val_loss: 0.2788 - val_sparse_categorical_accuracy: 0.8863 - learning_rate: 1.0000e-03

## Epoch 32/500

## 90/90 - 0s - 2ms/step - loss: 0.2546 - sparse_categorical_accuracy: 0.8903 - val_loss: 0.2598 - val_sparse_categorical_accuracy: 0.8821 - learning_rate: 1.0000e-03

## Epoch 33/500

## 90/90 - 0s - 2ms/step - loss: 0.2408 - sparse_categorical_accuracy: 0.9062 - val_loss: 0.2632 - val_sparse_categorical_accuracy: 0.8793 - learning_rate: 1.0000e-03

## Epoch 34/500

## 90/90 - 0s - 2ms/step - loss: 0.2373 - sparse_categorical_accuracy: 0.9052 - val_loss: 0.2966 - val_sparse_categorical_accuracy: 0.8710 - learning_rate: 1.0000e-03

## Epoch 35/500

## 90/90 - 0s - 1ms/step - loss: 0.2560 - sparse_categorical_accuracy: 0.8903 - val_loss: 0.6435 - val_sparse_categorical_accuracy: 0.7309 - learning_rate: 1.0000e-03

## Epoch 36/500

## 90/90 - 0s - 1ms/step - loss: 0.2391 - sparse_categorical_accuracy: 0.9035 - val_loss: 0.2705 - val_sparse_categorical_accuracy: 0.8807 - learning_rate: 1.0000e-03

## Epoch 37/500

## 90/90 - 0s - 2ms/step - loss: 0.2384 - sparse_categorical_accuracy: 0.8993 - val_loss: 0.2720 - val_sparse_categorical_accuracy: 0.8738 - learning_rate: 1.0000e-03

## Epoch 38/500

## 90/90 - 0s - 2ms/step - loss: 0.2339 - sparse_categorical_accuracy: 0.9080 - val_loss: 0.9019 - val_sparse_categorical_accuracy: 0.7309 - learning_rate: 1.0000e-03

## Epoch 39/500

## 90/90 - 0s - 2ms/step - loss: 0.2404 - sparse_categorical_accuracy: 0.9038 - val_loss: 0.4007 - val_sparse_categorical_accuracy: 0.8294 - learning_rate: 1.0000e-03

## Epoch 40/500

## 90/90 - 0s - 2ms/step - loss: 0.2265 - sparse_categorical_accuracy: 0.9104 - val_loss: 0.6253 - val_sparse_categorical_accuracy: 0.7559 - learning_rate: 1.0000e-03

## Epoch 41/500

## 90/90 - 0s - 2ms/step - loss: 0.2159 - sparse_categorical_accuracy: 0.9174 - val_loss: 0.2307 - val_sparse_categorical_accuracy: 0.8974 - learning_rate: 1.0000e-03

## Epoch 42/500

## 90/90 - 0s - 2ms/step - loss: 0.2081 - sparse_categorical_accuracy: 0.9271 - val_loss: 0.5934 - val_sparse_categorical_accuracy: 0.7434 - learning_rate: 1.0000e-03

## Epoch 43/500

## 90/90 - 0s - 2ms/step - loss: 0.2135 - sparse_categorical_accuracy: 0.9153 - val_loss: 0.2809 - val_sparse_categorical_accuracy: 0.8613 - learning_rate: 1.0000e-03

## Epoch 44/500

## 90/90 - 0s - 2ms/step - loss: 0.2152 - sparse_categorical_accuracy: 0.9149 - val_loss: 0.3832 - val_sparse_categorical_accuracy: 0.8211 - learning_rate: 1.0000e-03

## Epoch 45/500

## 90/90 - 0s - 2ms/step - loss: 0.2060 - sparse_categorical_accuracy: 0.9233 - val_loss: 0.3182 - val_sparse_categorical_accuracy: 0.8682 - learning_rate: 1.0000e-03

## Epoch 46/500

## 90/90 - 0s - 2ms/step - loss: 0.2023 - sparse_categorical_accuracy: 0.9229 - val_loss: 0.2104 - val_sparse_categorical_accuracy: 0.9140 - learning_rate: 1.0000e-03

## Epoch 47/500

## 90/90 - 0s - 1ms/step - loss: 0.1855 - sparse_categorical_accuracy: 0.9309 - val_loss: 0.2882 - val_sparse_categorical_accuracy: 0.8877 - learning_rate: 1.0000e-03

## Epoch 48/500

## 90/90 - 0s - 1ms/step - loss: 0.1789 - sparse_categorical_accuracy: 0.9306 - val_loss: 0.4009 - val_sparse_categorical_accuracy: 0.8197 - learning_rate: 1.0000e-03

## Epoch 49/500

## 90/90 - 0s - 1ms/step - loss: 0.1643 - sparse_categorical_accuracy: 0.9417 - val_loss: 0.2160 - val_sparse_categorical_accuracy: 0.9071 - learning_rate: 1.0000e-03

## Epoch 50/500

## 90/90 - 0s - 1ms/step - loss: 0.1599 - sparse_categorical_accuracy: 0.9462 - val_loss: 0.6680 - val_sparse_categorical_accuracy: 0.6768 - learning_rate: 1.0000e-03

## Epoch 51/500

## 90/90 - 0s - 1ms/step - loss: 0.1623 - sparse_categorical_accuracy: 0.9420 - val_loss: 1.2733 - val_sparse_categorical_accuracy: 0.7254 - learning_rate: 1.0000e-03

## Epoch 52/500

## 90/90 - 0s - 2ms/step - loss: 0.1465 - sparse_categorical_accuracy: 0.9507 - val_loss: 1.1664 - val_sparse_categorical_accuracy: 0.6976 - learning_rate: 1.0000e-03

## Epoch 53/500

## 90/90 - 0s - 1ms/step - loss: 0.1453 - sparse_categorical_accuracy: 0.9465 - val_loss: 1.3677 - val_sparse_categorical_accuracy: 0.7074 - learning_rate: 1.0000e-03

## Epoch 54/500

## 90/90 - 0s - 1ms/step - loss: 0.1370 - sparse_categorical_accuracy: 0.9563 - val_loss: 0.2190 - val_sparse_categorical_accuracy: 0.9098 - learning_rate: 1.0000e-03

## Epoch 55/500

## 90/90 - 0s - 1ms/step - loss: 0.1319 - sparse_categorical_accuracy: 0.9563 - val_loss: 0.2376 - val_sparse_categorical_accuracy: 0.8932 - learning_rate: 1.0000e-03

## Epoch 56/500

## 90/90 - 0s - 2ms/step - loss: 0.1354 - sparse_categorical_accuracy: 0.9549 - val_loss: 0.1614 - val_sparse_categorical_accuracy: 0.9334 - learning_rate: 1.0000e-03

## Epoch 57/500

## 90/90 - 0s - 2ms/step - loss: 0.1298 - sparse_categorical_accuracy: 0.9590 - val_loss: 0.1651 - val_sparse_categorical_accuracy: 0.9376 - learning_rate: 1.0000e-03

## Epoch 58/500

## 90/90 - 0s - 2ms/step - loss: 0.1188 - sparse_categorical_accuracy: 0.9569 - val_loss: 0.2961 - val_sparse_categorical_accuracy: 0.8766 - learning_rate: 1.0000e-03

## Epoch 59/500

## 90/90 - 0s - 2ms/step - loss: 0.1295 - sparse_categorical_accuracy: 0.9545 - val_loss: 0.1905 - val_sparse_categorical_accuracy: 0.9209 - learning_rate: 1.0000e-03

## Epoch 60/500

## 90/90 - 0s - 1ms/step - loss: 0.1281 - sparse_categorical_accuracy: 0.9552 - val_loss: 0.1835 - val_sparse_categorical_accuracy: 0.9140 - learning_rate: 1.0000e-03

## Epoch 61/500

## 90/90 - 0s - 1ms/step - loss: 0.1176 - sparse_categorical_accuracy: 0.9646 - val_loss: 1.6040 - val_sparse_categorical_accuracy: 0.6741 - learning_rate: 1.0000e-03

## Epoch 62/500

## 90/90 - 0s - 1ms/step - loss: 0.1363 - sparse_categorical_accuracy: 0.9500 - val_loss: 2.0555 - val_sparse_categorical_accuracy: 0.6963 - learning_rate: 1.0000e-03

## Epoch 63/500

## 90/90 - 0s - 1ms/step - loss: 0.1358 - sparse_categorical_accuracy: 0.9483 - val_loss: 3.2565 - val_sparse_categorical_accuracy: 0.6352 - learning_rate: 1.0000e-03

## Epoch 64/500

## 90/90 - 0s - 2ms/step - loss: 0.1276 - sparse_categorical_accuracy: 0.9590 - val_loss: 0.3056 - val_sparse_categorical_accuracy: 0.8669 - learning_rate: 1.0000e-03

## Epoch 65/500

## 90/90 - 0s - 2ms/step - loss: 0.1189 - sparse_categorical_accuracy: 0.9590 - val_loss: 0.3571 - val_sparse_categorical_accuracy: 0.8530 - learning_rate: 1.0000e-03

## Epoch 66/500

## 90/90 - 0s - 2ms/step - loss: 0.1111 - sparse_categorical_accuracy: 0.9653 - val_loss: 0.1403 - val_sparse_categorical_accuracy: 0.9473 - learning_rate: 1.0000e-03

## Epoch 67/500

## 90/90 - 0s - 2ms/step - loss: 0.1120 - sparse_categorical_accuracy: 0.9635 - val_loss: 1.6044 - val_sparse_categorical_accuracy: 0.6477 - learning_rate: 1.0000e-03

## Epoch 68/500

## 90/90 - 0s - 2ms/step - loss: 0.1123 - sparse_categorical_accuracy: 0.9632 - val_loss: 0.6339 - val_sparse_categorical_accuracy: 0.7989 - learning_rate: 1.0000e-03

## Epoch 69/500

## 90/90 - 0s - 2ms/step - loss: 0.1150 - sparse_categorical_accuracy: 0.9611 - val_loss: 0.3409 - val_sparse_categorical_accuracy: 0.8585 - learning_rate: 1.0000e-03

## Epoch 70/500

## 90/90 - 0s - 1ms/step - loss: 0.1120 - sparse_categorical_accuracy: 0.9597 - val_loss: 0.1705 - val_sparse_categorical_accuracy: 0.9209 - learning_rate: 1.0000e-03

## Epoch 71/500

## 90/90 - 0s - 2ms/step - loss: 0.1070 - sparse_categorical_accuracy: 0.9646 - val_loss: 0.3976 - val_sparse_categorical_accuracy: 0.8488 - learning_rate: 1.0000e-03

## Epoch 72/500

## 90/90 - 0s - 2ms/step - loss: 0.1076 - sparse_categorical_accuracy: 0.9663 - val_loss: 0.1426 - val_sparse_categorical_accuracy: 0.9473 - learning_rate: 1.0000e-03

## Epoch 73/500

## 90/90 - 0s - 2ms/step - loss: 0.1025 - sparse_categorical_accuracy: 0.9677 - val_loss: 0.3274 - val_sparse_categorical_accuracy: 0.8613 - learning_rate: 1.0000e-03

## Epoch 74/500

## 90/90 - 0s - 2ms/step - loss: 0.1056 - sparse_categorical_accuracy: 0.9628 - val_loss: 0.2165 - val_sparse_categorical_accuracy: 0.9182 - learning_rate: 1.0000e-03

## Epoch 75/500

## 90/90 - 0s - 1ms/step - loss: 0.1303 - sparse_categorical_accuracy: 0.9524 - val_loss: 0.6197 - val_sparse_categorical_accuracy: 0.7282 - learning_rate: 1.0000e-03

## Epoch 76/500

## 90/90 - 0s - 1ms/step - loss: 0.1205 - sparse_categorical_accuracy: 0.9590 - val_loss: 0.2972 - val_sparse_categorical_accuracy: 0.8793 - learning_rate: 1.0000e-03

## Epoch 77/500

## 90/90 - 0s - 1ms/step - loss: 0.1061 - sparse_categorical_accuracy: 0.9632 - val_loss: 0.2158 - val_sparse_categorical_accuracy: 0.9182 - learning_rate: 1.0000e-03

## Epoch 78/500

## 90/90 - 0s - 1ms/step - loss: 0.0959 - sparse_categorical_accuracy: 0.9705 - val_loss: 0.1449 - val_sparse_categorical_accuracy: 0.9362 - learning_rate: 1.0000e-03

## Epoch 79/500

## 90/90 - 0s - 2ms/step - loss: 0.0995 - sparse_categorical_accuracy: 0.9674 - val_loss: 0.1231 - val_sparse_categorical_accuracy: 0.9598 - learning_rate: 1.0000e-03

## Epoch 80/500

## 90/90 - 0s - 1ms/step - loss: 0.1027 - sparse_categorical_accuracy: 0.9677 - val_loss: 0.5311 - val_sparse_categorical_accuracy: 0.7712 - learning_rate: 1.0000e-03

## Epoch 81/500

## 90/90 - 0s - 2ms/step - loss: 0.1012 - sparse_categorical_accuracy: 0.9677 - val_loss: 0.1622 - val_sparse_categorical_accuracy: 0.9348 - learning_rate: 1.0000e-03

## Epoch 82/500

## 90/90 - 0s - 1ms/step - loss: 0.0929 - sparse_categorical_accuracy: 0.9663 - val_loss: 0.1457 - val_sparse_categorical_accuracy: 0.9417 - learning_rate: 1.0000e-03

## Epoch 83/500

## 90/90 - 0s - 1ms/step - loss: 0.1008 - sparse_categorical_accuracy: 0.9663 - val_loss: 1.6042 - val_sparse_categorical_accuracy: 0.6879 - learning_rate: 1.0000e-03

## Epoch 84/500

## 90/90 - 0s - 1ms/step - loss: 0.0981 - sparse_categorical_accuracy: 0.9653 - val_loss: 0.1732 - val_sparse_categorical_accuracy: 0.9307 - learning_rate: 1.0000e-03

## Epoch 85/500

## 90/90 - 0s - 1ms/step - loss: 0.1006 - sparse_categorical_accuracy: 0.9674 - val_loss: 1.8495 - val_sparse_categorical_accuracy: 0.6976 - learning_rate: 1.0000e-03

## Epoch 86/500

## 90/90 - 0s - 1ms/step - loss: 0.1005 - sparse_categorical_accuracy: 0.9660 - val_loss: 0.1263 - val_sparse_categorical_accuracy: 0.9459 - learning_rate: 1.0000e-03

## Epoch 87/500

## 90/90 - 0s - 1ms/step - loss: 0.1054 - sparse_categorical_accuracy: 0.9632 - val_loss: 0.2135 - val_sparse_categorical_accuracy: 0.9140 - learning_rate: 1.0000e-03

## Epoch 88/500

## 90/90 - 0s - 2ms/step - loss: 0.1051 - sparse_categorical_accuracy: 0.9656 - val_loss: 1.3499 - val_sparse_categorical_accuracy: 0.6630 - learning_rate: 1.0000e-03

## Epoch 89/500

## 90/90 - 0s - 2ms/step - loss: 0.1037 - sparse_categorical_accuracy: 0.9615 - val_loss: 0.2271 - val_sparse_categorical_accuracy: 0.9029 - learning_rate: 1.0000e-03

## Epoch 90/500

## 90/90 - 0s - 2ms/step - loss: 0.0976 - sparse_categorical_accuracy: 0.9670 - val_loss: 0.7824 - val_sparse_categorical_accuracy: 0.7129 - learning_rate: 1.0000e-03

## Epoch 91/500

## 90/90 - 0s - 1ms/step - loss: 0.0995 - sparse_categorical_accuracy: 0.9677 - val_loss: 0.2369 - val_sparse_categorical_accuracy: 0.8918 - learning_rate: 1.0000e-03

## Epoch 92/500

## 90/90 - 0s - 2ms/step - loss: 0.0986 - sparse_categorical_accuracy: 0.9670 - val_loss: 0.1185 - val_sparse_categorical_accuracy: 0.9584 - learning_rate: 1.0000e-03

## Epoch 93/500

## 90/90 - 0s - 2ms/step - loss: 0.0957 - sparse_categorical_accuracy: 0.9670 - val_loss: 1.8619 - val_sparse_categorical_accuracy: 0.5270 - learning_rate: 1.0000e-03

## Epoch 94/500

## 90/90 - 0s - 2ms/step - loss: 0.0999 - sparse_categorical_accuracy: 0.9642 - val_loss: 0.4507 - val_sparse_categorical_accuracy: 0.8350 - learning_rate: 1.0000e-03

## Epoch 95/500

## 90/90 - 0s - 2ms/step - loss: 0.0939 - sparse_categorical_accuracy: 0.9653 - val_loss: 1.0529 - val_sparse_categorical_accuracy: 0.6893 - learning_rate: 1.0000e-03

## Epoch 96/500

## 90/90 - 0s - 2ms/step - loss: 0.1066 - sparse_categorical_accuracy: 0.9608 - val_loss: 0.1416 - val_sparse_categorical_accuracy: 0.9417 - learning_rate: 1.0000e-03

## Epoch 97/500

## 90/90 - 0s - 1ms/step - loss: 0.1068 - sparse_categorical_accuracy: 0.9618 - val_loss: 0.5324 - val_sparse_categorical_accuracy: 0.7961 - learning_rate: 1.0000e-03

## Epoch 98/500

## 90/90 - 0s - 1ms/step - loss: 0.0916 - sparse_categorical_accuracy: 0.9694 - val_loss: 0.8154 - val_sparse_categorical_accuracy: 0.7656 - learning_rate: 1.0000e-03

## Epoch 99/500

## 90/90 - 0s - 2ms/step - loss: 0.0942 - sparse_categorical_accuracy: 0.9639 - val_loss: 0.1314 - val_sparse_categorical_accuracy: 0.9542 - learning_rate: 1.0000e-03

## Epoch 100/500

## 90/90 - 0s - 1ms/step - loss: 0.0938 - sparse_categorical_accuracy: 0.9698 - val_loss: 2.2256 - val_sparse_categorical_accuracy: 0.6366 - learning_rate: 1.0000e-03

## Epoch 101/500

## 90/90 - 0s - 2ms/step - loss: 0.0853 - sparse_categorical_accuracy: 0.9750 - val_loss: 0.1660 - val_sparse_categorical_accuracy: 0.9376 - learning_rate: 1.0000e-03

## Epoch 102/500

## 90/90 - 0s - 2ms/step - loss: 0.0957 - sparse_categorical_accuracy: 0.9691 - val_loss: 0.2444 - val_sparse_categorical_accuracy: 0.8932 - learning_rate: 1.0000e-03

## Epoch 103/500

## 90/90 - 0s - 1ms/step - loss: 0.0949 - sparse_categorical_accuracy: 0.9660 - val_loss: 1.3661 - val_sparse_categorical_accuracy: 0.6519 - learning_rate: 1.0000e-03

## Epoch 104/500

## 90/90 - 0s - 1ms/step - loss: 0.1033 - sparse_categorical_accuracy: 0.9622 - val_loss: 0.2086 - val_sparse_categorical_accuracy: 0.9126 - learning_rate: 1.0000e-03

## Epoch 105/500

## 90/90 - 0s - 1ms/step - loss: 0.0981 - sparse_categorical_accuracy: 0.9663 - val_loss: 0.2216 - val_sparse_categorical_accuracy: 0.8988 - learning_rate: 1.0000e-03

## Epoch 106/500

## 90/90 - 0s - 1ms/step - loss: 0.0895 - sparse_categorical_accuracy: 0.9681 - val_loss: 2.2126 - val_sparse_categorical_accuracy: 0.6269 - learning_rate: 1.0000e-03

## Epoch 107/500

## 90/90 - 0s - 1ms/step - loss: 0.0939 - sparse_categorical_accuracy: 0.9670 - val_loss: 0.4274 - val_sparse_categorical_accuracy: 0.8128 - learning_rate: 1.0000e-03

## Epoch 108/500

## 90/90 - 0s - 1ms/step - loss: 0.0894 - sparse_categorical_accuracy: 0.9719 - val_loss: 0.2745 - val_sparse_categorical_accuracy: 0.8946 - learning_rate: 1.0000e-03

## Epoch 109/500

## 90/90 - 0s - 2ms/step - loss: 0.0937 - sparse_categorical_accuracy: 0.9722 - val_loss: 0.1248 - val_sparse_categorical_accuracy: 0.9487 - learning_rate: 1.0000e-03

## Epoch 110/500

## 90/90 - 0s - 1ms/step - loss: 0.0965 - sparse_categorical_accuracy: 0.9656 - val_loss: 0.5962 - val_sparse_categorical_accuracy: 0.7989 - learning_rate: 1.0000e-03

## Epoch 111/500

## 90/90 - 0s - 1ms/step - loss: 0.0825 - sparse_categorical_accuracy: 0.9726 - val_loss: 0.1671 - val_sparse_categorical_accuracy: 0.9307 - learning_rate: 1.0000e-03

## Epoch 112/500

## 90/90 - 0s - 1ms/step - loss: 0.0879 - sparse_categorical_accuracy: 0.9694 - val_loss: 0.1298 - val_sparse_categorical_accuracy: 0.9390 - learning_rate: 1.0000e-03

## Epoch 113/500

## 90/90 - 1s - 13ms/step - loss: 0.0785 - sparse_categorical_accuracy: 0.9757 - val_loss: 0.1539 - val_sparse_categorical_accuracy: 0.9348 - learning_rate: 5.0000e-04

## Epoch 114/500

## 90/90 - 0s - 1ms/step - loss: 0.0914 - sparse_categorical_accuracy: 0.9722 - val_loss: 0.1216 - val_sparse_categorical_accuracy: 0.9542 - learning_rate: 5.0000e-04

## Epoch 115/500

## 90/90 - 0s - 2ms/step - loss: 0.0754 - sparse_categorical_accuracy: 0.9740 - val_loss: 0.1167 - val_sparse_categorical_accuracy: 0.9431 - learning_rate: 5.0000e-04

## Epoch 116/500

## 90/90 - 0s - 1ms/step - loss: 0.0786 - sparse_categorical_accuracy: 0.9753 - val_loss: 0.1977 - val_sparse_categorical_accuracy: 0.9279 - learning_rate: 5.0000e-04

## Epoch 117/500

## 90/90 - 0s - 1ms/step - loss: 0.0758 - sparse_categorical_accuracy: 0.9736 - val_loss: 0.1724 - val_sparse_categorical_accuracy: 0.9431 - learning_rate: 5.0000e-04

## Epoch 118/500

## 90/90 - 0s - 2ms/step - loss: 0.0793 - sparse_categorical_accuracy: 0.9712 - val_loss: 0.1316 - val_sparse_categorical_accuracy: 0.9501 - learning_rate: 5.0000e-04

## Epoch 119/500

## 90/90 - 0s - 2ms/step - loss: 0.0781 - sparse_categorical_accuracy: 0.9750 - val_loss: 0.1054 - val_sparse_categorical_accuracy: 0.9598 - learning_rate: 5.0000e-04

## Epoch 120/500

## 90/90 - 0s - 2ms/step - loss: 0.0808 - sparse_categorical_accuracy: 0.9740 - val_loss: 0.1425 - val_sparse_categorical_accuracy: 0.9515 - learning_rate: 5.0000e-04

## Epoch 121/500

## 90/90 - 0s - 2ms/step - loss: 0.0761 - sparse_categorical_accuracy: 0.9753 - val_loss: 0.1359 - val_sparse_categorical_accuracy: 0.9431 - learning_rate: 5.0000e-04

## Epoch 122/500

## 90/90 - 0s - 1ms/step - loss: 0.0813 - sparse_categorical_accuracy: 0.9719 - val_loss: 0.1067 - val_sparse_categorical_accuracy: 0.9556 - learning_rate: 5.0000e-04

## Epoch 123/500

## 90/90 - 0s - 2ms/step - loss: 0.0778 - sparse_categorical_accuracy: 0.9767 - val_loss: 0.1328 - val_sparse_categorical_accuracy: 0.9528 - learning_rate: 5.0000e-04

## Epoch 124/500

## 90/90 - 0s - 2ms/step - loss: 0.0822 - sparse_categorical_accuracy: 0.9705 - val_loss: 0.1931 - val_sparse_categorical_accuracy: 0.9265 - learning_rate: 5.0000e-04

## Epoch 125/500

## 90/90 - 0s - 2ms/step - loss: 0.0797 - sparse_categorical_accuracy: 0.9719 - val_loss: 0.1279 - val_sparse_categorical_accuracy: 0.9459 - learning_rate: 5.0000e-04

## Epoch 126/500

## 90/90 - 0s - 1ms/step - loss: 0.0761 - sparse_categorical_accuracy: 0.9743 - val_loss: 0.2298 - val_sparse_categorical_accuracy: 0.9154 - learning_rate: 5.0000e-04

## Epoch 127/500

## 90/90 - 0s - 1ms/step - loss: 0.0740 - sparse_categorical_accuracy: 0.9802 - val_loss: 0.2257 - val_sparse_categorical_accuracy: 0.9223 - learning_rate: 5.0000e-04

## Epoch 128/500

## 90/90 - 0s - 2ms/step - loss: 0.0772 - sparse_categorical_accuracy: 0.9760 - val_loss: 0.1223 - val_sparse_categorical_accuracy: 0.9515 - learning_rate: 5.0000e-04

## Epoch 129/500

## 90/90 - 0s - 1ms/step - loss: 0.0760 - sparse_categorical_accuracy: 0.9764 - val_loss: 0.1443 - val_sparse_categorical_accuracy: 0.9501 - learning_rate: 5.0000e-04

## Epoch 130/500

## 90/90 - 0s - 1ms/step - loss: 0.0841 - sparse_categorical_accuracy: 0.9743 - val_loss: 0.1104 - val_sparse_categorical_accuracy: 0.9612 - learning_rate: 5.0000e-04

## Epoch 131/500

## 90/90 - 0s - 1ms/step - loss: 0.0697 - sparse_categorical_accuracy: 0.9785 - val_loss: 0.1062 - val_sparse_categorical_accuracy: 0.9584 - learning_rate: 5.0000e-04

## Epoch 132/500

## 90/90 - 0s - 1ms/step - loss: 0.0739 - sparse_categorical_accuracy: 0.9743 - val_loss: 0.1153 - val_sparse_categorical_accuracy: 0.9653 - learning_rate: 5.0000e-04

## Epoch 133/500

## 90/90 - 0s - 1ms/step - loss: 0.0704 - sparse_categorical_accuracy: 0.9781 - val_loss: 0.1186 - val_sparse_categorical_accuracy: 0.9473 - learning_rate: 5.0000e-04

## Epoch 134/500

## 90/90 - 0s - 1ms/step - loss: 0.0753 - sparse_categorical_accuracy: 0.9774 - val_loss: 0.2290 - val_sparse_categorical_accuracy: 0.9196 - learning_rate: 5.0000e-04

## Epoch 135/500

## 90/90 - 0s - 1ms/step - loss: 0.0734 - sparse_categorical_accuracy: 0.9743 - val_loss: 0.1498 - val_sparse_categorical_accuracy: 0.9501 - learning_rate: 5.0000e-04

## Epoch 136/500

## 90/90 - 0s - 1ms/step - loss: 0.0773 - sparse_categorical_accuracy: 0.9712 - val_loss: 0.1266 - val_sparse_categorical_accuracy: 0.9570 - learning_rate: 5.0000e-04

## Epoch 137/500

## 90/90 - 0s - 1ms/step - loss: 0.0807 - sparse_categorical_accuracy: 0.9740 - val_loss: 0.1143 - val_sparse_categorical_accuracy: 0.9639 - learning_rate: 5.0000e-04

## Epoch 138/500

## 90/90 - 0s - 1ms/step - loss: 0.0755 - sparse_categorical_accuracy: 0.9753 - val_loss: 0.2602 - val_sparse_categorical_accuracy: 0.9015 - learning_rate: 5.0000e-04

## Epoch 139/500

## 90/90 - 0s - 2ms/step - loss: 0.0807 - sparse_categorical_accuracy: 0.9750 - val_loss: 0.0994 - val_sparse_categorical_accuracy: 0.9626 - learning_rate: 5.0000e-04

## Epoch 140/500

## 90/90 - 0s - 1ms/step - loss: 0.0697 - sparse_categorical_accuracy: 0.9774 - val_loss: 0.2056 - val_sparse_categorical_accuracy: 0.9182 - learning_rate: 5.0000e-04

## Epoch 141/500

## 90/90 - 0s - 2ms/step - loss: 0.0701 - sparse_categorical_accuracy: 0.9753 - val_loss: 0.1829 - val_sparse_categorical_accuracy: 0.9362 - learning_rate: 5.0000e-04

## Epoch 142/500

## 90/90 - 0s - 1ms/step - loss: 0.0669 - sparse_categorical_accuracy: 0.9792 - val_loss: 0.1132 - val_sparse_categorical_accuracy: 0.9598 - learning_rate: 5.0000e-04

## Epoch 143/500

## 90/90 - 0s - 1ms/step - loss: 0.0751 - sparse_categorical_accuracy: 0.9767 - val_loss: 0.1953 - val_sparse_categorical_accuracy: 0.9223 - learning_rate: 5.0000e-04

## Epoch 144/500

## 90/90 - 0s - 1ms/step - loss: 0.0660 - sparse_categorical_accuracy: 0.9792 - val_loss: 0.1440 - val_sparse_categorical_accuracy: 0.9404 - learning_rate: 5.0000e-04

## Epoch 145/500

## 90/90 - 0s - 2ms/step - loss: 0.0673 - sparse_categorical_accuracy: 0.9764 - val_loss: 0.6545 - val_sparse_categorical_accuracy: 0.8100 - learning_rate: 5.0000e-04

## Epoch 146/500

## 90/90 - 0s - 2ms/step - loss: 0.0708 - sparse_categorical_accuracy: 0.9757 - val_loss: 0.1321 - val_sparse_categorical_accuracy: 0.9542 - learning_rate: 5.0000e-04

## Epoch 147/500

## 90/90 - 0s - 2ms/step - loss: 0.0733 - sparse_categorical_accuracy: 0.9764 - val_loss: 0.1479 - val_sparse_categorical_accuracy: 0.9487 - learning_rate: 5.0000e-04

## Epoch 148/500

## 90/90 - 0s - 2ms/step - loss: 0.0797 - sparse_categorical_accuracy: 0.9715 - val_loss: 0.3700 - val_sparse_categorical_accuracy: 0.8752 - learning_rate: 5.0000e-04

## Epoch 149/500

## 90/90 - 0s - 2ms/step - loss: 0.0709 - sparse_categorical_accuracy: 0.9760 - val_loss: 0.1170 - val_sparse_categorical_accuracy: 0.9584 - learning_rate: 5.0000e-04

## Epoch 150/500

## 90/90 - 0s - 2ms/step - loss: 0.0761 - sparse_categorical_accuracy: 0.9726 - val_loss: 0.1173 - val_sparse_categorical_accuracy: 0.9653 - learning_rate: 5.0000e-04

## Epoch 151/500

## 90/90 - 0s - 3ms/step - loss: 0.0728 - sparse_categorical_accuracy: 0.9719 - val_loss: 0.1596 - val_sparse_categorical_accuracy: 0.9334 - learning_rate: 5.0000e-04

## Epoch 152/500

## 90/90 - 0s - 2ms/step - loss: 0.0727 - sparse_categorical_accuracy: 0.9722 - val_loss: 0.1086 - val_sparse_categorical_accuracy: 0.9528 - learning_rate: 5.0000e-04

## Epoch 153/500

## 90/90 - 0s - 2ms/step - loss: 0.0704 - sparse_categorical_accuracy: 0.9771 - val_loss: 0.1305 - val_sparse_categorical_accuracy: 0.9487 - learning_rate: 5.0000e-04

## Epoch 154/500

## 90/90 - 0s - 2ms/step - loss: 0.0667 - sparse_categorical_accuracy: 0.9781 - val_loss: 0.1788 - val_sparse_categorical_accuracy: 0.9279 - learning_rate: 5.0000e-04

## Epoch 155/500

## 90/90 - 0s - 2ms/step - loss: 0.0702 - sparse_categorical_accuracy: 0.9764 - val_loss: 0.1109 - val_sparse_categorical_accuracy: 0.9501 - learning_rate: 5.0000e-04

## Epoch 156/500

## 90/90 - 0s - 1ms/step - loss: 0.0741 - sparse_categorical_accuracy: 0.9764 - val_loss: 0.1325 - val_sparse_categorical_accuracy: 0.9431 - learning_rate: 5.0000e-04

## Epoch 157/500

## 90/90 - 0s - 1ms/step - loss: 0.0750 - sparse_categorical_accuracy: 0.9722 - val_loss: 0.1820 - val_sparse_categorical_accuracy: 0.9348 - learning_rate: 5.0000e-04

## Epoch 158/500

## 90/90 - 0s - 1ms/step - loss: 0.0714 - sparse_categorical_accuracy: 0.9771 - val_loss: 0.1982 - val_sparse_categorical_accuracy: 0.9182 - learning_rate: 5.0000e-04

## Epoch 159/500

## 90/90 - 0s - 2ms/step - loss: 0.0732 - sparse_categorical_accuracy: 0.9750 - val_loss: 0.2841 - val_sparse_categorical_accuracy: 0.8988 - learning_rate: 5.0000e-04

## Epoch 160/500

## 90/90 - 0s - 1ms/step - loss: 0.0679 - sparse_categorical_accuracy: 0.9760 - val_loss: 0.1040 - val_sparse_categorical_accuracy: 0.9639 - learning_rate: 2.5000e-04

## Epoch 161/500

## 90/90 - 0s - 2ms/step - loss: 0.0649 - sparse_categorical_accuracy: 0.9785 - val_loss: 0.1281 - val_sparse_categorical_accuracy: 0.9459 - learning_rate: 2.5000e-04

## Epoch 162/500

## 90/90 - 0s - 2ms/step - loss: 0.0623 - sparse_categorical_accuracy: 0.9781 - val_loss: 0.1535 - val_sparse_categorical_accuracy: 0.9431 - learning_rate: 2.5000e-04

## Epoch 163/500

## 90/90 - 0s - 2ms/step - loss: 0.0588 - sparse_categorical_accuracy: 0.9813 - val_loss: 0.1049 - val_sparse_categorical_accuracy: 0.9667 - learning_rate: 2.5000e-04

## Epoch 164/500

## 90/90 - 0s - 2ms/step - loss: 0.0615 - sparse_categorical_accuracy: 0.9816 - val_loss: 0.1094 - val_sparse_categorical_accuracy: 0.9515 - learning_rate: 2.5000e-04

## Epoch 165/500

## 90/90 - 0s - 2ms/step - loss: 0.0594 - sparse_categorical_accuracy: 0.9809 - val_loss: 0.1116 - val_sparse_categorical_accuracy: 0.9515 - learning_rate: 2.5000e-04

## Epoch 166/500

## 90/90 - 0s - 1ms/step - loss: 0.0586 - sparse_categorical_accuracy: 0.9806 - val_loss: 0.1275 - val_sparse_categorical_accuracy: 0.9501 - learning_rate: 2.5000e-04

## Epoch 167/500

## 90/90 - 0s - 1ms/step - loss: 0.0605 - sparse_categorical_accuracy: 0.9795 - val_loss: 0.1516 - val_sparse_categorical_accuracy: 0.9417 - learning_rate: 2.5000e-04

## Epoch 168/500

## 90/90 - 0s - 1ms/step - loss: 0.0696 - sparse_categorical_accuracy: 0.9785 - val_loss: 0.1038 - val_sparse_categorical_accuracy: 0.9612 - learning_rate: 2.5000e-04

## Epoch 169/500

## 90/90 - 0s - 1ms/step - loss: 0.0612 - sparse_categorical_accuracy: 0.9799 - val_loss: 0.1354 - val_sparse_categorical_accuracy: 0.9598 - learning_rate: 2.5000e-04

## Epoch 170/500

## 90/90 - 0s - 1ms/step - loss: 0.0626 - sparse_categorical_accuracy: 0.9802 - val_loss: 0.1219 - val_sparse_categorical_accuracy: 0.9598 - learning_rate: 2.5000e-04

## Epoch 171/500

## 90/90 - 0s - 1ms/step - loss: 0.0582 - sparse_categorical_accuracy: 0.9816 - val_loss: 0.1627 - val_sparse_categorical_accuracy: 0.9417 - learning_rate: 2.5000e-04

## Epoch 172/500

## 90/90 - 0s - 2ms/step - loss: 0.0601 - sparse_categorical_accuracy: 0.9795 - val_loss: 0.1023 - val_sparse_categorical_accuracy: 0.9723 - learning_rate: 2.5000e-04

## Epoch 173/500

## 90/90 - 0s - 2ms/step - loss: 0.0587 - sparse_categorical_accuracy: 0.9813 - val_loss: 0.1001 - val_sparse_categorical_accuracy: 0.9639 - learning_rate: 2.5000e-04

## Epoch 174/500

## 90/90 - 0s - 2ms/step - loss: 0.0611 - sparse_categorical_accuracy: 0.9806 - val_loss: 0.1214 - val_sparse_categorical_accuracy: 0.9487 - learning_rate: 2.5000e-04

## Epoch 175/500

## 90/90 - 0s - 1ms/step - loss: 0.0630 - sparse_categorical_accuracy: 0.9788 - val_loss: 0.1159 - val_sparse_categorical_accuracy: 0.9556 - learning_rate: 2.5000e-04

## Epoch 176/500

## 90/90 - 0s - 1ms/step - loss: 0.0593 - sparse_categorical_accuracy: 0.9792 - val_loss: 0.1535 - val_sparse_categorical_accuracy: 0.9473 - learning_rate: 2.5000e-04

## Epoch 177/500

## 90/90 - 0s - 2ms/step - loss: 0.0579 - sparse_categorical_accuracy: 0.9844 - val_loss: 0.1511 - val_sparse_categorical_accuracy: 0.9473 - learning_rate: 2.5000e-04

## Epoch 178/500

## 90/90 - 0s - 2ms/step - loss: 0.0552 - sparse_categorical_accuracy: 0.9826 - val_loss: 0.2204 - val_sparse_categorical_accuracy: 0.9140 - learning_rate: 2.5000e-04

## Epoch 179/500

## 90/90 - 0s - 2ms/step - loss: 0.0572 - sparse_categorical_accuracy: 0.9799 - val_loss: 0.1048 - val_sparse_categorical_accuracy: 0.9681 - learning_rate: 2.5000e-04

## Epoch 180/500

## 90/90 - 0s - 2ms/step - loss: 0.0554 - sparse_categorical_accuracy: 0.9823 - val_loss: 0.1197 - val_sparse_categorical_accuracy: 0.9695 - learning_rate: 1.2500e-04

## Epoch 181/500

## 90/90 - 0s - 1ms/step - loss: 0.0575 - sparse_categorical_accuracy: 0.9837 - val_loss: 0.1206 - val_sparse_categorical_accuracy: 0.9653 - learning_rate: 1.2500e-04

## Epoch 182/500

## 90/90 - 0s - 1ms/step - loss: 0.0537 - sparse_categorical_accuracy: 0.9833 - val_loss: 0.1074 - val_sparse_categorical_accuracy: 0.9667 - learning_rate: 1.2500e-04

## Epoch 183/500

## 90/90 - 0s - 2ms/step - loss: 0.0571 - sparse_categorical_accuracy: 0.9809 - val_loss: 0.1052 - val_sparse_categorical_accuracy: 0.9695 - learning_rate: 1.2500e-04

## Epoch 184/500

## 90/90 - 0s - 2ms/step - loss: 0.0528 - sparse_categorical_accuracy: 0.9830 - val_loss: 0.1022 - val_sparse_categorical_accuracy: 0.9709 - learning_rate: 1.2500e-04

## Epoch 185/500

## 90/90 - 0s - 1ms/step - loss: 0.0550 - sparse_categorical_accuracy: 0.9830 - val_loss: 0.1042 - val_sparse_categorical_accuracy: 0.9653 - learning_rate: 1.2500e-04

## Epoch 186/500

## 90/90 - 0s - 2ms/step - loss: 0.0605 - sparse_categorical_accuracy: 0.9792 - val_loss: 0.1048 - val_sparse_categorical_accuracy: 0.9695 - learning_rate: 1.2500e-04

## Epoch 187/500

## 90/90 - 0s - 1ms/step - loss: 0.0539 - sparse_categorical_accuracy: 0.9847 - val_loss: 0.1090 - val_sparse_categorical_accuracy: 0.9639 - learning_rate: 1.2500e-04

## Epoch 188/500

## 90/90 - 0s - 2ms/step - loss: 0.0540 - sparse_categorical_accuracy: 0.9840 - val_loss: 0.1189 - val_sparse_categorical_accuracy: 0.9653 - learning_rate: 1.2500e-04

## Epoch 189/500

## 90/90 - 0s - 2ms/step - loss: 0.0514 - sparse_categorical_accuracy: 0.9854 - val_loss: 0.1599 - val_sparse_categorical_accuracy: 0.9431 - learning_rate: 1.2500e-04

## Epoch 189: early stopping

Evaluate model on test data

model <- load_model("best_model.keras")

results <- model |> evaluate(x_test, y_test)

## 42/42 - 1s - 16ms/step - loss: 0.0998 - sparse_categorical_accuracy: 0.9652

str(results)

## List of 2

## $ loss : num 0.0998

## $ sparse_categorical_accuracy: num 0.965
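The `sparse_categorical_accuracy` value reported above is the fraction of samples whose highest-probability class matches the integer label. The base-R sketch below reproduces that calculation on a small made-up probability matrix. The names `probs`, `y_true`, and `y_pred` and all values are illustrative assumptions, standing in for model predictions on `x_test` and for zero-based labels in `y_test`.

```r
# Toy class probabilities for 4 samples and 2 classes (made-up values,
# standing in for the model's predicted probabilities on the test set).
probs <- matrix(
  c(0.9, 0.1,
    0.2, 0.8,
    0.6, 0.4,
    0.3, 0.7),
  ncol = 2, byrow = TRUE
)

# Made-up integer labels, assumed to be zero-based (0 or 1).
y_true <- c(0L, 1L, 1L, 1L)

# Column index of the largest probability in each row, shifted to zero-based.
y_pred <- max.col(probs, ties.method = "first") - 1L

# Fraction of samples where the predicted class equals the label.
accuracy <- mean(y_pred == y_true)
accuracy
```

With real data, `probs` would come from the model's predictions and `y_true` from the test labels; the result should then agree with the metric returned by `evaluate()`.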

cat(

"Test accuracy: ", results$sparse_categorical_accuracy, "\n",

"Test loss: ", results$loss, "\n",

sep = ""

)

## Test accuracy: 0.9651515

## Test loss: 0.0998451

Plot the model’s training history

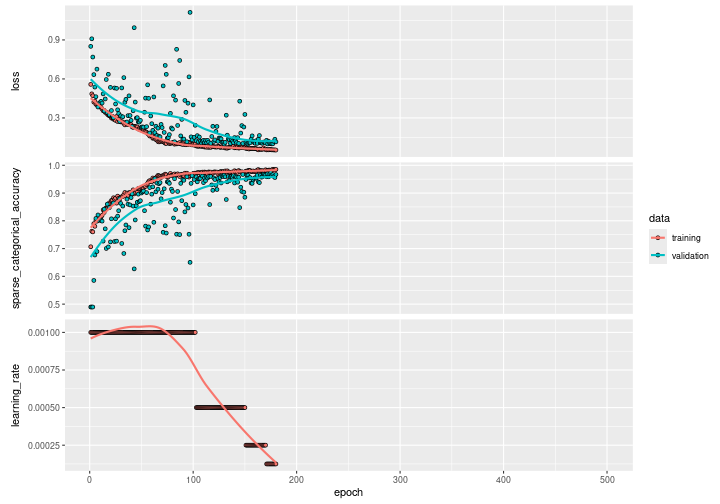

plot(history)

Plot just the training and validation accuracy:

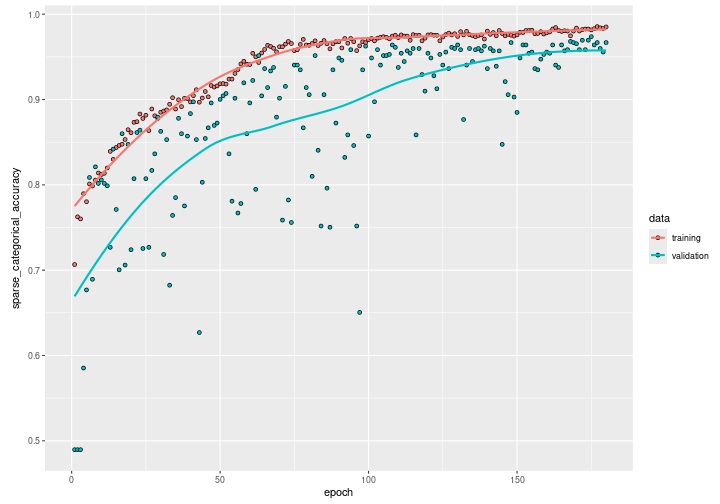

plot(history, metric = "sparse_categorical_accuracy") +

# scale x axis to actual number of epochs run before early stopping

ggplot2::xlim(0, length(history$metrics$loss))

The training accuracy is already high (about 0.97) shortly after epoch 100, but the validation accuracy is still noisy at that point, swinging between roughly 0.80 and 0.95. The network therefore still needs training: after the learning rate has been reduced several times, the validation accuracy settles near 0.97 while the training accuracy rises above 0.98. In this run, early stopping ended training at epoch 189. If we continued training well past that point, the validation accuracy would start decreasing while the training accuracy kept increasing: the model would start overfitting.
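One way to check for the onset of overfitting numerically, rather than by eye, is to look for the epoch with the lowest validation loss and at how the gap between validation and training loss evolves. The sketch below uses a small made-up list shaped like `history$metrics`. The names `metrics`, `best_epoch`, `gap`, and `epochs_since_best` and all values are illustrative assumptions, not results from the run above.

```r
# Hypothetical per-epoch metrics, shaped like `history$metrics`
# (values are made up for illustration).
metrics <- list(
  loss     = c(0.60, 0.40, 0.30, 0.22, 0.18, 0.15),
  val_loss = c(0.62, 0.45, 0.36, 0.33, 0.35, 0.39)
)

# Epoch at which the validation loss was lowest.
best_epoch <- which.min(metrics$val_loss)

# Gap between validation and training loss per epoch; a gap that keeps
# growing after the best epoch is the usual overfitting signature.
gap <- metrics$val_loss - metrics$loss

# Number of epochs run since the best one.
epochs_since_best <- length(metrics$val_loss) - best_epoch

best_epoch
epochs_since_best
```

Applied to a real run, replacing `metrics` with `history$metrics` would give the epoch with the lowest validation loss in that run.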